In this post I present a simple technique that can be quite helpful when you hit a wall during your analysis and don’t know what else to do. The idea relies on cluster analysis, a.k.a. clustering. Notably, the implementation of this technique is very simple, but it is hard to generalize: it depends very much on the case and the data. In the example below I focus on cluster analysis of a Windows file system, aimed mainly at discovering minor but grouped changes to the system, which are usually associated with a malware infection.

A typical computer forensics case deals with a tremendous amount of data, and whether we analyze it in an automated fashion or walk through the evidence manually, we are pretty much wasting our time until we finally find the first clue. Purists could say that we are not really wasting our time, as we are slowly ruling out possibilities, and they are right, but I hope they will agree that such analysis is not fun. It can be quite frustrating, all the more so because we know that finding that first trace of suspicious activity is what will shape further analysis; in fact, it is what really kicks off the proper investigation (and this is also where the fun begins :)).

But finding that first piece of the puzzle is not a trivial task, for many reasons. If you are lucky, you may find malware still running in memory, the evidence may not have been contaminated, or the clues may be all over the place. In many cases, though, the evidence may already have been contaminated by the attackers or by ‘helpful’ IT staff who cleaned up the system before the data acquisition. There may be no malware involved at all, as the case may deal with contraband, fraud, plagiarism or other issues. Also, in many production environments a lot of changes are introduced to the system on a regular basis (e.g. via system updates, transaction records, logs, etc.), often saved into separate files, generating a large number of small files. Analyzing such systems can be a real nightmare (e.g. hundreds of thousands of files on a single file system).

Over the years, investigators have designed many techniques to sift through the data and have adapted them into many useful tools and methodologies. We have timelines, file typing, various kinds of filtering, Least Frequency of Occurrence, and many other system- or artifact-specific analyses, e.g. analysis of processes, the registry, prefetch files, etc.

A typical Windows system is a mess. Operating system files, applications, user profiles, data copied all over the place by users (often many of them), followed by the content of temp folders, cache directories, recycled folders, admin scripts, registry clutter, and production data (if it applies). And lots more.

How to reduce this data into something useful?

One easy way to make some sense of it is filtering, e.g. by file type. Files with specific extensions can be grouped together and such groups assessed one by one. This may work, but it is also very inefficient. There could be literally hundreds of thousands of files with the same extension, and there are hundreds, often thousands, of different file extensions on the system. On top of that, a file extension is not necessarily indicative of a file’s content (an .exe file doesn’t need to be an executable, and pictures or executables can be stored in files with misleading extensions).
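A minimal sketch of extension-based grouping, plus a crude content check for the misleading-extension case, might look like this in Python. It assumes the file paths and the first few bytes of each file have already been collected; the set of ‘expected’ executable extensions (`PE_EXTENSIONS`) and both function names are hypothetical, and checking for the `MZ` DOS header is only a rough signature test, not full file typing.

```python
from collections import Counter
from pathlib import PureWindowsPath

# Hypothetical set of extensions we treat as "expected" for PE files.
PE_EXTENSIONS = {".exe", ".dll", ".sys", ".ocx", ".scr", ".cpl"}


def extension_counts(paths):
    """Count files per lower-cased extension ('' when there is none)."""
    return Counter(PureWindowsPath(p).suffix.lower() for p in paths)


def misnamed_executables(samples):
    """Return paths whose content starts with the 'MZ' DOS header
    but whose extension is not in PE_EXTENSIONS.

    `samples` is an iterable of (path, first_bytes) pairs.
    """
    return [
        path
        for path, head in samples
        if head[:2] == b"MZ"
        and PureWindowsPath(path).suffix.lower() not in PE_EXTENSIONS
    ]
```

For example, `extension_counts([r"C:\Windows\notepad.exe", r"C:\Temp\a.JPG", r"C:\Temp\b.jpg"])` counts two `.jpg` files and one `.exe` file. On a real system the counter would have hundreds or thousands of keys, which is exactly the inefficiency described above.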

Another way to look at the file system is to realize that file system changes are logically grouped and often happen in an atomic or almost-atomic way, i.e. all at once within a short period of time, forming pretty much a sequence of ‘file system updates’. These are the clusters we will focus on here. Such updates often happen at regular intervals and affect only specific paths. Knowing that, one can try to build an improved timeline based not on individual timestamps, but on ranges of timestamps.
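The post does not spell out how such ranges are formed. One possible approach, sketched below under that assumption (it is not necessarily how the script used for this post works), is gap-based grouping: sort entries by timestamp and start a new cluster whenever the gap to the previous entry exceeds a chosen number of seconds. The `(timestamp, path)` input layout, the function name and the default `max_gap` of 10 seconds are all illustrative choices.

```python
def timestamp_ranges(entries, max_gap=10):
    """Group (timestamp, path) entries into 'file system updates'.

    Entries are sorted by timestamp (Unix epoch seconds); a new cluster
    starts whenever the gap to the previous entry exceeds `max_gap` seconds.
    Returns a list of (first_ts, last_ts, paths) tuples.
    """
    clusters = []
    for ts, path in sorted(entries):
        if clusters and ts - clusters[-1][1] <= max_gap:
            first, _, paths = clusters[-1]
            paths.append(path)
            clusters[-1] = (first, ts, paths)  # extend the current range
        else:
            clusters.append((ts, ts, [path]))  # start a new range
    return clusters


if __name__ == "__main__":
    demo = [(1330000000, "grep.exe"), (1330000004, "cygwin1.dll"),
            (1330000900, "fls.exe")]
    for first, last, paths in timestamp_ranges(demo):
        print(f"{first}-{last}\t{len(paths)} file(s)\t{paths}")
```

Because ranges are chained entry by entry, a long burst of activity with small gaps can stretch one range over several minutes; capping the total length of a range would be one way to limit that.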

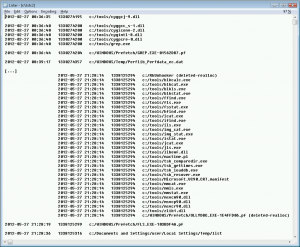

First, consider an example of a typical timeline. One can see that I copied grep.exe to the system together with the Cygwin DLLs necessary to run it (without them grep.exe was unable to resolve its dependencies). I then ran it (as seen in the Prefetch files). Next, I copied the Sleuth Kit binaries into a tools directory and ran fls.exe, creating a list file that I used for the purpose of this article.

As you can see, the Sleuth Kit binaries form a nice cluster. The script I used to generate the listing takes into account the number of files created at the same time, and if that number is higher than 10, it pushes the file list to the right. Under normal conditions the script wouldn’t need to print them at all, but I do it here for the sake of clarity, as a hint to the reader that such a large ‘file update’ may potentially be skipped in analysis. One can easily modify the script to either not show them at all or apply some more logic before presenting the output. Of course, ignoring them completely is a bad idea: it is only by seeing the example above that you can tell I happened to drop some files on the system. Again, depending on what you are looking for, you need to define the criteria that form your clusters and push out of sight the things that are not interesting.
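The display rule described above can be approximated as follows. This is a hypothetical re-creation, not the author’s script: it groups `(timestamp, path)` pairs by identical timestamp, indents groups with more than `threshold` files, and optionally hides them altogether (`hide_large`), which corresponds to the modification mentioned above. The parameter names and the 40-space indent are illustrative.

```python
from collections import defaultdict


def render_timeline(entries, threshold=10, indent=40, hide_large=False):
    """Render (timestamp, path) pairs grouped by identical timestamp.

    Groups with more than `threshold` files (e.g. a bulk copy or an install)
    are pushed `indent` spaces to the right, or dropped entirely when
    `hide_large` is True, so that small, isolated changes stand out.
    """
    groups = defaultdict(list)
    for ts, path in entries:
        groups[ts].append(path)

    lines = []
    for ts in sorted(groups):
        paths = sorted(groups[ts])
        large = len(paths) > threshold
        if large and hide_large:
            continue  # push the bulk update out of sight entirely
        pad = " " * indent if large else ""
        lines.extend(f"{pad}{ts}\t{path}" for path in paths)
    return lines


if __name__ == "__main__":
    demo = [(1330000000, f"tools\\tsk\\file{i}.exe") for i in range(12)]
    demo.append((1330000500, "Windows\\Temp\\dropper.dll"))
    for line in render_timeline(demo):
        print(line)
```

Returning the lines instead of printing them directly keeps the rule easy to tweak, for example to apply the extra logic the post suggests before anything is shown.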

One simple improvement that can be made to the timeline and clustering is timestamp normalization. By normalization I mean a way to glue more timestamps together, even if they do not match perfectly. It can be done in many ways; for example, if we assume that the window of an atomic or almost-atomic ‘file system update’ is 10 or even 60 seconds, we suddenly become able to assign more entries to a single cluster. The more entries in a cluster, the better the chance that it is pushed out of sight.

In my example above, merging the entries that fall within a 60-second time window makes all grep-related artifacts fall into one cluster:

The first column is a normalized timestamp (normalized to minutes i.e. 60 seconds), then actual timestamp, then path.
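Normalization to a fixed window, and the three-column layout just described, could be sketched like this. It assumes Unix epoch timestamps and floors each one to the start of its 60-second bucket; `normalize`, `normalized_timeline` and `cluster_sizes` are illustrative names, not part of any existing tool.

```python
from collections import Counter


def normalize(ts, window=60):
    """Floor a Unix timestamp to the start of its `window`-second bucket."""
    return ts - ts % window


def normalized_timeline(entries, window=60):
    """Return (normalized_ts, actual_ts, path) rows, oldest first:
    normalized timestamp, then actual timestamp, then path."""
    return sorted((normalize(ts, window), ts, path) for ts, path in entries)


def cluster_sizes(rows):
    """Number of entries that share each normalized timestamp."""
    return Counter(norm for norm, _, _ in rows)


if __name__ == "__main__":
    rows = normalized_timeline([(1329999965, "grep.exe"),
                                (1330000000, "cygwin1.dll")])
    for norm, actual, path in rows:
        print(norm, actual, path, sep="\t")
```

A design caveat of fixed buckets: two entries one second apart can still land in different clusters if they straddle a bucket boundary (e.g. `normalize(119)` is 60 while `normalize(120)` is 120), so a sliding or gap-based window may be worth trying when that matters.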

Clustering is a very interesting technique, yet it does not seem to be widely used. Applied on top of a timeline, it could help reduce the amount of data for manual review and, most importantly, may immediately highlight suspicious artifacts based on specific criteria. As I mentioned earlier, in the example above I decided to remove from view all the entries that form clusters of 10 or more files. This threshold is arbitrary, but doesn’t have to be. It would certainly be helpful in finding malware, as malware rarely drops more than 10 files on the system, yet it would not help in finding stashed content that has been copied in bulk. A lot more research is needed to find out how to utilize this technique more widely and perhaps to generate scenarios that can be converted into usable ‘dynamic smart filters’ applicable on top of any data.

The following things may be taken into consideration:

- various artifacts (on top of timeline across the whole system)

- various timestamps (one can run cluster analysis separately for creation, modification, access, and MFT entry modification times)

- various scopes: across the whole file system, within a single directory, or within a directory and its subdirectories

- less utilized timestamps, e.g. timestamps extracted from Portable Executables; those that stand out deserve a closer look (standard system binaries are usually compiled with a specific timestamp or within a narrow range of timestamps)

- data other than timestamps and timelines, e.g. the image base of Portable Executables, which is also a good candidate for finding executables that stand out
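To experiment with the Portable Executable ideas from the list, the `TimeDateStamp` field of the COFF file header and the `ImageBase` field of the optional header can be read with a few `struct` calls, following the layout in Microsoft’s PE/COFF specification. This is a minimal sketch that assumes the file’s bytes are already in memory; `pe_fields` is a hypothetical helper, and it does no validation beyond the `MZ`/`PE` signatures and the optional-header magic.

```python
import struct


def pe_fields(data):
    """Return (TimeDateStamp, ImageBase) read from raw PE bytes,
    or None if the data does not look like a PE32/PE32+ image."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        return None
    try:
        # e_lfanew at offset 0x3C of the DOS header points to "PE\0\0".
        (e_lfanew,) = struct.unpack_from("<I", data, 0x3C)
        if data[e_lfanew:e_lfanew + 4] != b"PE\0\0":
            return None
        # COFF header: Machine (2), NumberOfSections (2), TimeDateStamp (4).
        (timestamp,) = struct.unpack_from("<I", data, e_lfanew + 8)
        # Optional header follows the 4-byte signature + 20-byte COFF header.
        opt = e_lfanew + 24
        (magic,) = struct.unpack_from("<H", data, opt)
        if magic == 0x10B:    # PE32: 4-byte ImageBase at offset 28
            (image_base,) = struct.unpack_from("<I", data, opt + 28)
        elif magic == 0x20B:  # PE32+: 8-byte ImageBase at offset 24
            (image_base,) = struct.unpack_from("<Q", data, opt + 24)
        else:
            return None
    except struct.error:  # headers truncated
        return None
    return timestamp, image_base


if __name__ == "__main__":
    import sys
    for name in sys.argv[1:]:
        with open(name, "rb") as fh:
            print(name, pe_fields(fh.read()))
```

The returned values can then be fed into the same kind of clustering as file system timestamps. Keep in mind that `TimeDateStamp` is set by whoever builds the binary, so it can be zeroed or forged.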

The data can be manipulated and analyzed in many ways, and ideally there should be an easy way to play with clustering parameters. I am not aware of any interactive tool that can do that in a generic way, but even simply toying around with Excel or a simple script can help here. If you know of any free software that already does it, I would appreciate it if you let me know.

Last but not least, even before clustering is applied, one can remove entries using known techniques, e.g. clean file hashes and known clean full paths, as well as data obtained from analyzing installers extracted from the case (run them, collect data about the file paths they create, and apply it as a whitelist), and other data that has a low chance of being interesting (e.g. directories outside the typical scope of system/user activity).
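The whitelisting step might be sketched as a simple pre-filter. It assumes each entry is a dict with hypothetical `md5` and `path` keys, and that the known-clean hashes and paths (e.g. collected from a clean installation or from the installer analysis mentioned above) are already available; fls itself does not compute file hashes, so those would have to come from a separate hashing step.

```python
def remove_known(entries, known_hashes=frozenset(), known_paths=frozenset()):
    """Drop entries whose MD5 or full path is on a whitelist.

    `entries` is an iterable of dicts with 'md5' and 'path' keys
    (a hypothetical layout). Hashes and paths are compared
    case-insensitively, as Windows paths usually are.
    """
    hashes = {h.lower() for h in known_hashes}
    paths = {p.lower() for p in known_paths}
    return [
        e for e in entries
        if e["md5"].lower() not in hashes and e["path"].lower() not in paths
    ]
```

Running this before any clustering shrinks both the number of clusters and their sizes, so whatever survives is more likely to be the minor, grouped change the post is looking for.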

If you want to toy around with the idea, you can download Cluester – an example script that I used for this post.

It can be downloaded from here.

It works on data obtained from running fls against an NTFS file system:

`fls -lrm <drive> -i <type> -f <type> \\.\<drive> > <output>`

For example:

`fls -lrm f: -i raw -f ntfs \\.\f: > list`
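For readers who want to write their own variant instead of using Cluester, here is a minimal parser for this output. It assumes the TSK 3.x body-file layout (`MD5|name|inode|mode_as_string|UID|GID|size|atime|mtime|ctime|crtime`, times in Unix epoch seconds), and the UTF-8 decoding with `errors="replace"` is a guess, since the encoding of redirected Windows console output may differ. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class BodyEntry:
    md5: str
    name: str
    inode: str
    mode: str
    size: int
    atime: int
    mtime: int
    ctime: int
    crtime: int


def parse_body_line(line):
    """Parse one line of TSK 3.x body-file output."""
    md5, rest = line.rstrip("\r\n").split("|", 1)
    # Split the fixed trailing fields from the right so a '|' in the name survives.
    name, inode, mode, _uid, _gid, size, atime, mtime, ctime, crtime = rest.rsplit("|", 9)
    return BodyEntry(md5, name, inode, mode, int(size),
                     int(atime), int(mtime), int(ctime), int(crtime))


def read_body_file(path):
    """Read every non-empty line of a body file such as the 'list' file above."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        return [parse_body_line(line) for line in fh if line.strip()]


def timeline(entries, field="crtime"):
    """(timestamp, name) pairs for one timestamp type, oldest first."""
    return sorted((getattr(e, field), e.name) for e in entries)
```

`timeline(read_body_file("list"), "crtime")` yields (creation time, name) pairs that can be passed to any clustering approach. Note that in this layout `ctime` is the metadata change time (on NTFS, the MFT entry modification time), not the creation time; creation time is `crtime`.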

Update: 2012-05-28

Fixed typos, grammar mistakes, polished it a bit (that’s what happens when you write posts at 2am ;).