While I am quite critical of the idea of collecting IOCs (Indicators of Compromise) that describe various malware, traces of hacking, etc. in the form of hashes (even fuzzy hashes), file names, sizes, and so on, I do believe that there is a certain number of IOCs (or as I call them: HFAs – Helpful Forensic Artifacts – as they are not necessarily relevant to the compromise itself) that are universal and worth collecting. I am talking about artifacts that are common to malware functionality and to offensive activities on the system in general, as well as any other artifact that may help both attackers and… investigators (thanks to ‘helpful’ users who leave unencrypted credentials in text files, watch movies on critical systems, etc.).

In this post, I will provide some practical examples of what I mean by that.

Before I kick it off, just a quick reminder – the reason why I am critical of bloated IOC databases is that they have very limited applicability in a general sense; the limitations are a result of various techniques used by malware authors, offensive teams, etc., including, but not limited to:

- metamorphism

- randomization

- encryption

- data (e.g. strings) built on the fly (instead of hardcoding)

- shellcode-like payloads

- fast-flux

- P2P

- covert channels

- etc.

Notably, antivirus detections of very advanced, metamorphic malware rely on state machines, not strings, and it’s naive to assume that collecting file names like sdra64.exe is going to save the day…

Anyway…

When we talk about good, interesting HFAs, I think of things such as:

- artifacts that are very often present in malware for the simple reason that they need to be there (dropping files, autostart, etc.)

- traces of activities that must be carried out on the compromised system (recon, downloading toolchests, etc.)

- also (notably) traces of user activity that support the attacker’s work (e.g. a file password.txt is not an IOC, but it is an HFA)

- traces of the system having been affected in a negative way, e.g. if the system was previously compromised by generic malware, certain settings could have been changed (e.g. disabled tracing, blocked Task Manager, etc.); they are IOCs in a generic sense, but not really relevant to the compromise actually under investigation; you can think of them as those aspects of system security that place the system on the opposite side from a properly secured and hardened box; this also includes previously detected/removed malware – imho AV logs are not ‘clear’ IOCs as long as they relate to an event that is not related to the investigated compromise

If we talk about typical, random malware, it’s usually stupidly written, using snippets copied and pasted from many sources on the internet. The authors are lazy and don’t even bother to encrypt strings, so detection is really easy. You can search the file, or a memory dump of a suspected process, for typical autorun strings using strings, BinText or HexDive, and most of the time you will find the smoking gun. If the attacker is advanced, all you will deal with is a blob of binary data that shows no visible trace of being malicious unless disassembled – that is, a relocation-independent, shellcode-like piece of mixed code/data in a metamorphic form that doesn’t require all the fuss of inline DLL/EXE loading; it’s just a pure piece of code. It’s actually simple to write with a basic knowledge of assembly language and OS internals. I honestly don’t know how to detect such malware in a generic way. I do believe that’s where the future of advanced malware is, though (apart from going mobile). And I chuckle when I see malware that is 20MB in size (no matter how advanced the functionality).
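To make the "grep for autorun strings" idea concrete, here is a minimal Python sketch of what tools like strings or BinText do, followed by a keyword filter. The keyword list is my own illustrative assumption, not a complete catalogue of autostart locations, and the function names are hypothetical.

```python
import re

# Assumed, non-exhaustive keywords tied to common autostart mechanisms.
AUTORUN_KEYWORDS = [
    r"CurrentVersion\Run",
    r"CurrentVersion\RunOnce",
    r"Winlogon",
    r"Userinit",
    r"AppInit_DLLs",
]

def extract_strings(blob: bytes, min_len: int = 5):
    """Yield (offset, text) for printable ASCII and UTF-16LE runs in blob."""
    ascii_re = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    wide_re = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)
    for m in ascii_re.finditer(blob):
        yield m.start(), m.group().decode("ascii")
    for m in wide_re.finditer(blob):
        yield m.start(), m.group().decode("utf-16-le")

def find_autorun_strings(blob: bytes):
    """Return sorted (offset, text) pairs whose text mentions an autorun keyword."""
    lowered = [k.lower() for k in AUTORUN_KEYWORDS]
    hits = [
        (off, s) for off, s in extract_strings(blob)
        if any(k in s.lower() for k in lowered)
    ]
    return sorted(hits)
```

Calling `find_autorun_strings(open(path, "rb").read())` on a file or process dump returns the offsets worth looking at first. As the paragraph above says, this only works against the lazy majority; an encrypted or code-only payload will yield nothing.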

When we talk about IOCs/HFAs and offensive security practices, it is worth mentioning that we need to follow the thought process of an attacker. Let me give you an example. Assume that the attacker gets on the system. What can s/he do? If malware is already there, it’s easy: the functionality is in place and can be leveraged, the malicious payload can be updated, and the attacker can do anything that the payload is programmed to do, within the boundaries of what the environment it runs in permits. On the other hand, if the attack comes through a typical hacking attempt, the situation is different. The attacker is then very limited when it comes to available tools/functionality and often has to leverage existing OS tools. This means exactly what it says – the attacker operates in a minimalistic environment and is going to use any tool available on the OS to his/her benefit. On a Windows system, these can be:

- net.exe (and also net1.exe)

- telnet.exe

- ftp.exe

but also

- arp.exe

- at.exe

- attrib.exe

- bitsadmin.exe

- cacls.exe

- certutil.exe

- cmd.exe

- command.com

- compact.exe

- cscript.exe

- debug.exe

- diantz.exe

- findstr.exe

- hostname.exe

- icacls.exe

- iexpress.exe

- ipconfig.exe

- makecab.exe

- mofcomp.exe

- more.com

- msiexec.exe

- mstsc.exe

- net1.exe

- netsh.exe

- netstat.exe

- ping.exe

- powershell.exe

- reg.exe

- regedit.exe

- regedt32.exe

- regini.exe

- regsvr32.exe

- robocopy.exe

- route.exe

- runas.exe

- rundll32.exe

- sc.exe

- schtasks.exe

- scrcons.exe

- shutdown.exe

- takeown.exe

- taskkill.exe

- tasklist.exe

- tracert.exe

- vssadmin.exe

- whoami.exe

- wscript.exe

- xcacls.exe

- xcopy.exe

and OS commands

- echo

- type

- dir

- md/mkdir

- systeminfo

and many other command line tools and commands.

So, if you analyze a memory dump from a Windows system, it’s good to search for the file names of built-in Windows utilities and look at the context, i.e. the surrounding memory region, to see why they might be there (cmd.exe /c being the most common, I guess).
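A rough sketch of that search in Python might look like the following. It looks for a handful of the utility names listed above, in both ASCII and UTF-16LE, and returns a printable view of the surrounding bytes. The function name, the shortened name list and the window size are assumptions made for illustration.

```python
import re

# A few names taken from the list above; extend as needed.
UTILITIES = ["cmd.exe", "net.exe", "net1.exe", "ftp.exe", "reg.exe", "schtasks.exe"]

def find_utility_context(dump: bytes, names=UTILITIES, window: int = 32):
    """Return sorted (offset, name, context) for case-insensitive ASCII/UTF-16LE hits."""
    results = []
    for name in names:
        for encoding in ("ascii", "utf-16-le"):
            needle = re.escape(name.encode(encoding))
            for m in re.finditer(needle, dump, re.IGNORECASE):
                start = max(0, m.start() - window)
                end = min(len(dump), m.end() + window)
                # Replace non-printable bytes with '.' so the context stays readable.
                ctx = "".join(chr(b) if 32 <= b < 127 else "." for b in dump[start:end])
                results.append((m.start(), name, ctx))
    return sorted(results)
```

Plain substring matching is noisy by design (for example, `net.exe` also matches inside `telnet.exe`), which is exactly why the context window matters: it is the surrounding command line, not the name alone, that tells you whether the hit is interesting.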

Back to the original reason for this post – since I wanted to provide some real/practical examples of HFAs that one can use to analyze hosts, let me start with a simple classification by functionality/purpose:

- information gathering

- net.exe

- net1.exe

- psexec.exe/psexesvc.exe

- dsquery.exe

- arp.exe

- traces of shell being used (cmd.exe /c)

- passwords.txt, password.txt, pass.txt, etc.

- data collection

- type of files storing collected data

- possibly password protected archives

- encrypted data (e..g credit cards/track data)

- various 3rd party tools to archive data:

- rar, 7z, pkzip, tar, arj, lha, kgb, xz, etc.

- OS-based tools

- compress.exe

- makecab.exe

- iexpress.exe

- diantz.exe

- type of collected data

- screen captures often saved as .jpg (small size)

- screen captures file names often include date

- keystroke names and their variants

- PgDn, [PgDn],{PgDn}

- VK_NEXT

- PageDown, [PageDown] {PageDown}

- timestamps (note that there are regional settings)

- predictable Windows titles

- [ C:\WINDOWS\system32\notepad.exe ]

- [ C:\WINDOWS\system32\calc.exe ]

- [http://google.com/ – Windows Internet Explorer]

- [Google – Windows Internet Explorer]

- [InPrivate – Windows Internet Explorer – [InPrivate]]

- possible excluded window class names

- msctls_progress32

- SysTabControl32

- SysTreeView32

- content of the address bar

- attractive data for attackers

- regexes for PII (searching for names/dictionary/, states, countries, phone numbers, etc. may help)

- anything that matches Luhn algorithm (credit cards)

- input field names from web pages and related to intercepted/recognized credentials

- user

- username

- password

- pin

- predictable user-generated content

- internet searches

- chats (acronymes, swearwords, smileys, etc.)

- type of files storing collected data

- data exfiltration

- who

- username/passwords

- how

- ftp client (ftp.exe, far.exe, etc.)

- browser (POSTs, more advanced: GETs)

- DNS requests

- USB stick

- burnt CD

- printer

- how

- just in time (frequent network connection)

- ‘coming back’ to the system

- configuration

- file

- registry

- uses GUI (lots of good keywords!)

- where to:

- URLs

- FTP server names

- SMTP servers

- mapped drives (\\foo\c$)

- mapped remote paths (e.g. \\tsclient)

- who

- malicious code

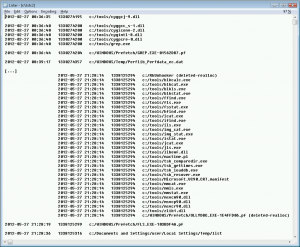

- any .exe/.zip in TEMP/APPLICATION DATA subfolders

- processes that have a low editing distance between their names and known system processes (e.g. lsass.exe vs. lsas.exe)

- processes that use known system processes but start from a different path

- areas of memory containing “islands” with raw addresses of APIs typically used by malware e.g. CreateRemoteThread, WriteProcessMemory, wininet functions

- mistakes

- Event logs

- AV logs/quarantine files

- leftovers (files, etc.)
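The two process-related items in the malicious code group (look-alike names, and system names running from the wrong path) are easy to sketch. The baseline below is a deliberately tiny, assumed table of process names and directories; a real one would have to account for legitimate variations (e.g. 32-bit copies in other system folders). The `classify_process` helper and its verdict labels are hypothetical.

```python
import ntpath

# Assumed, deliberately short baseline: system process name -> usual directory.
KNOWN = {
    "lsass.exe": r"c:\windows\system32",
    "svchost.exe": r"c:\windows\system32",
    "services.exe": r"c:\windows\system32",
    "csrss.exe": r"c:\windows\system32",
    "explorer.exe": r"c:\windows",
}

def edit_distance(a: str, b: str) -> int:
    """Classic Levenshtein distance, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]

def classify_process(image_path: str, max_distance: int = 2):
    """Return a (verdict, detail) pair for a full Windows process image path."""
    directory, name = ntpath.split(image_path.lower())
    if name in KNOWN:
        if directory == KNOWN[name]:
            return ("ok", name)
        return ("unexpected-path", name)
    for known in KNOWN:
        # Close to a system name, but not identical: a likely look-alike.
        if 0 < edit_distance(name, known) <= max_distance:
            return ("lookalike", known)
    return ("unknown", name)
```

For example, `classify_process(r"C:\Windows\System32\lsas.exe")` yields `("lookalike", "lsass.exe")`. The threshold of 2 is a guess; too high a value starts flagging unrelated short names.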

Many of these HFAs form a very manageable set that, when put together, can be applied to different data sets (file names, file paths, file content, registry settings, memory content, process dumps, etc.).
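As a sketch of what "a manageable set applied to different data sets" could look like in practice, the snippet below bundles a few of the items from the classification into one small rule set that can be run over any text (a file path, file content, or strings pulled from a dump). The rule names, the exact patterns and the `scan` function are my own assumptions built from the examples in the list; they are a starting point, not a complete set.

```python
import re

def luhn_ok(digits: str) -> bool:
    """Return True if a string of decimal digits passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:        # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

# Hypothetical rule names; patterns are built from examples in the list above.
RULES = {
    "keystroke-name": re.compile(r"[\[{]?(?:PgDn|PageDown|VK_NEXT)[\]}]?", re.I),
    "credential-field": re.compile(r"\b(?:user(?:name)?|password|pin)\s*=", re.I),
    "archiver": re.compile(r"\b(?:rar|7z|makecab|iexpress|diantz)(?:\.exe)?\b", re.I),
}
# Runs of 13 to 19 digits not embedded in a longer digit run (assumed card lengths).
CARD_RE = re.compile(r"(?<!\d)\d{13,19}(?!\d)")

def scan(text: str):
    """Return a sorted list of (rule, matched_text) pairs found in text."""
    hits = {(rule, m.group()) for rule, rx in RULES.items() for m in rx.finditer(text)}
    hits |= {("luhn-candidate", m.group())
             for m in CARD_RE.finditer(text) if luhn_ok(m.group())}
    return sorted(hits)
```

The same `scan` call works on a directory listing, a registry export or the output of a strings pass, which is the point: the rules describe behaviour, not a specific sample. Expect false positives (a Luhn-valid number is not necessarily a card), so treat hits as leads to review.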

In other words – instead of chasing sample/family/hacking group-specific stuff, we look for traces of all the things that make malware – malware, a weak system – weak, a hack – a hack, and an attack-supporting user – a victim.